Artificial intelligence (AI) has become extremely popular in recent years and can be found nearly everywhere: in toys, art, music, medical tools, grocery stores, and more. Chris Earle, the CEO and co-founder of Sangwa Solutions, defines AI as “a machine that can learn. Generally, people have this notion that there is something called ‘The AI’ which would be some sort of singularity of AI like, the AI ‘overlords.’ But I think of it more as a facet of technology.”

How did we get to this definition? A long history lies behind the prominent role AI now plays in our society. AI is an extremely versatile technology that offers many opportunities. Here is a brief history of artificial intelligence:

The History of Artificial Intelligence

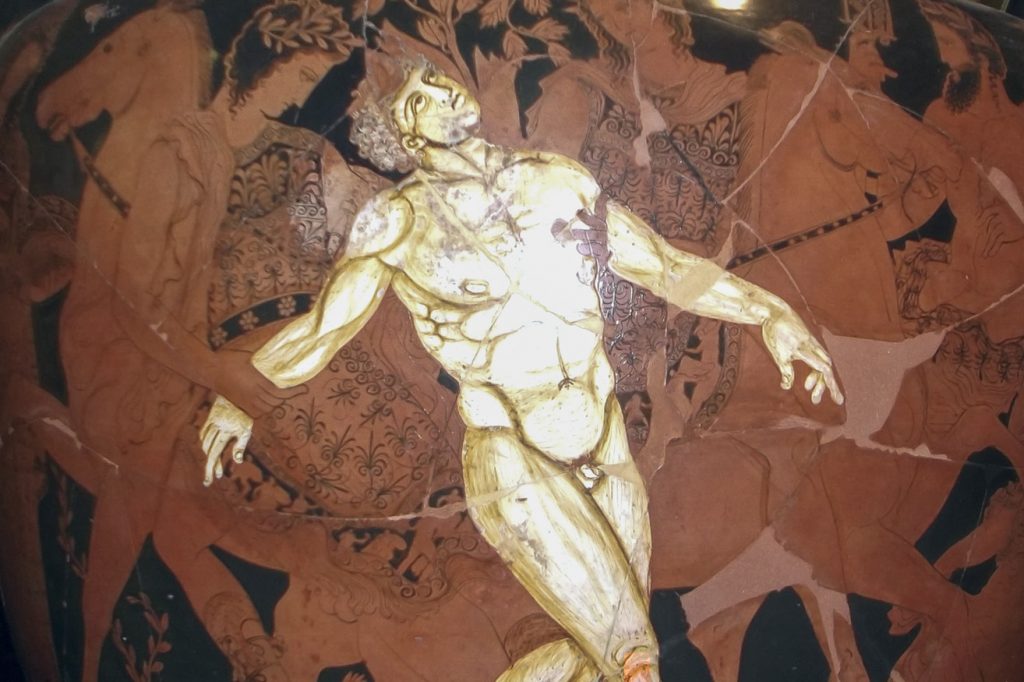

Like many inventions, the idea of AI can be traced back to antiquity, to the engineers of ancient Greece and Egypt. Historical records include stories about inanimate objects gaining human intelligence.

Talos is one of the earliest references to something like AI or robotics: a bronze machine made by the Greek gods (in most versions of the myth, by Hephaestus), also known as the ‘killer robot’ or ‘the man of bronze.’ He is remembered for his demise and has been described as an “embodiment of technological achievement and divine power intertwined in a single mythic being.” This ancient Greek myth imagined technology far beyond anything that existed at the time.

It was not until the 20th century that the ideas behind these ancient Greek myths began to take modern form. An early modern depiction of artificial beings appeared in the 1921 play ‘Rossum’s Universal Robots.’ Because it depicts a factory that creates artificial people, or robots, the play helped lead the general public to associate artificial intelligence almost exclusively with robots. It also introduced the word ‘robot’ to the English language, borrowed from Czech. Robots, artificial intelligence, and machines became extremely popular in science-fiction media, such as the Tin Man in The Wizard of Oz. It wasn’t until the 1950s that the concept seemed feasible in real life.

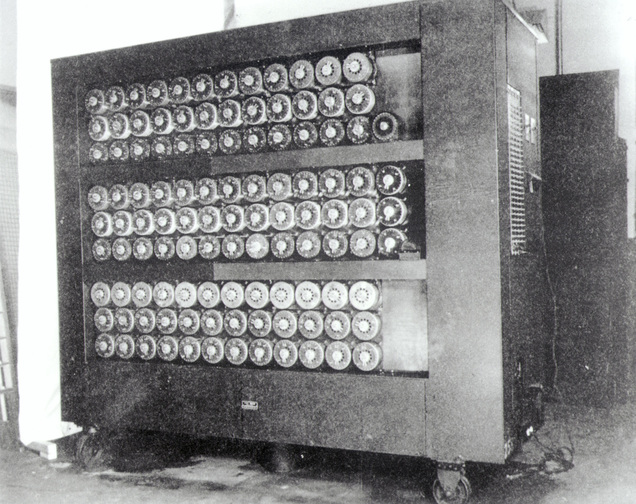

In 1950, mathematician Alan Turing posed the question, “Can machines think?” In his paper, Computing Machinery and Intelligence, he explored whether machines could think and solve problems the way humans do. Unfortunately, the concept was too advanced for the computers of the time. They could only execute commands, not store them, and computing was extremely expensive during that era. Even so, mathematicians like Turing, along with philosophers and scientists, continued to examine the possibility of machines having artificial intelligence.

Five years later, in 1955, Allen Newell, Cliff Shaw, and Herbert Simon designed Logic Theorist, a program built to imitate human problem-solving and often regarded as a prototype of AI. At this point, it became apparent that machine thinking was possible. That same year, John McCarthy coined the term ‘artificial intelligence,’ and the field began to flourish. Computers made major advances: their speed and storage increased while their costs decreased.

The Perceptron, an early artificial neural network, was developed by Frank Rosenblatt in 1957. The New York Times described it as the “embryo of an electronic computer that [was expected to] be able to walk, talk, see, write, reproduce itself and be conscious of its existence.” Essentially, the Perceptron connected a web of simple units, loosely modeled on neurons, and weighed their inputs to make simple yes-or-no decisions.
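To make the idea of “simple decisions” more concrete, here is a minimal Python sketch of a perceptron-style learning rule. It is a modern illustration only, not a reconstruction of Rosenblatt's original system. The function names, the learning rate, and the tiny AND-gate dataset are all assumptions chosen for this example.

```python
def predict(weights, bias, inputs):
    """Return 1 if the weighted sum of the inputs is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(samples, epochs=20, learning_rate=1.0):
    """Nudge the weights toward the right answer after every mistake."""
    weights = [0.0, 0.0]  # one weight per input (hypothetical two-input setup)
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # error is 0 when the guess is right, +1 or -1 when it is wrong
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Illustrative data: output 1 only when both inputs are 1 (a logical AND).
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train(AND_SAMPLES)
predictions = [predict(weights, bias, inputs) for inputs, _ in AND_SAMPLES]
print(predictions)
```

After training, the sketch answers 1 only for the input (1, 1). A single unit like this can only learn patterns that a straight line can separate, which is one reason later systems combined many such units.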

Fortran, one of the first high-level programming languages, was also released that year. Lisp, a language long associated with AI research and still used today, was developed in 1958. In 1961, General Motors installed an industrial robot on its assembly line. It performed repetitive and dangerous tasks, such as welding. There were great achievements in nearly every sector, and the government took great interest in these advancements. However, the flourishing of AI came to a halt in 1974.

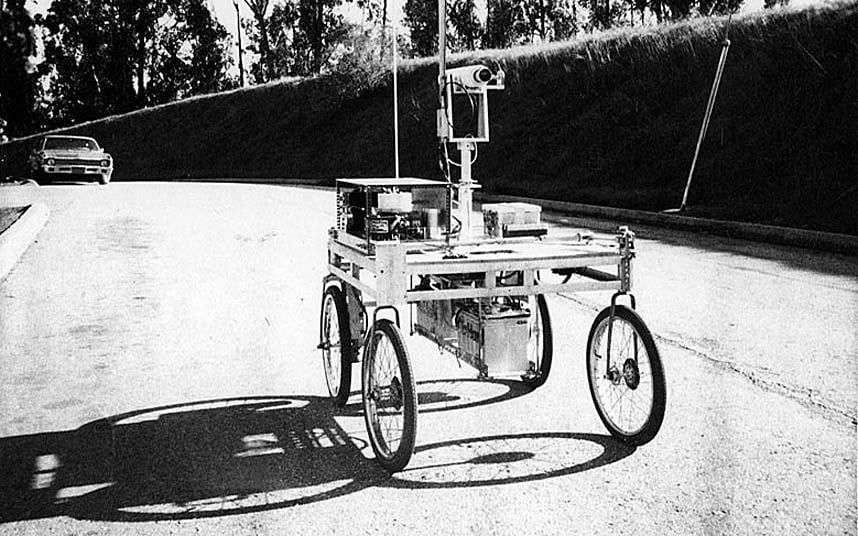

The period from 1974 to 1980 became known as the ‘AI Winter’ because interest in and funding for artificial intelligence diminished. Although progress was extremely difficult, advancements were still made. The Stanford Cart was created and embraced by students and researchers. The cart was conceived as a ‘moon rover’ to be controlled from the earth. It learned to follow a white line on the ground steadily and could be tracked (watch it here).

This rather bulky robot was one of the first robots built at Stanford University and helped shape the future of robotics. Although the initial enthusiasm for AI had diminished, some institutions continued to support and fund research, making further advances possible.

After the AI winter, investment began to grow again. The Japanese government invested $400 million between 1982 and 1990 in hopes of revolutionizing computing, and AI specifically. This investment inspired many young engineers and scientists to enter the field.

Numerous building blocks have contributed to the knowledge we have of AI now. With these major milestones, many predicted that AI would soon be capable of taking over any job. There are limits to what AI can do, but its versatility is remarkable, and it has been extremely useful in many current industries. Read more about what AI looks like now here.